KAIST Multispectral Pedestrian Detection Benchmark

Leaderboard

Because the original paper caused some confusion, results have sometimes been reported under different evaluation settings. To facilitate fair comparison, researchers at Sejong University (Jiwon Kim, Hyeongjun Kim, Tae-Joo Kim and Yukyung Choi) created a leaderboard, so please use it for your research.

Introduction

By Soonmin Hwang, Jaesik Park, Namil Kim, Yukyung Choi, In So Kweon at RCV Lab. (KAIST) [Website]

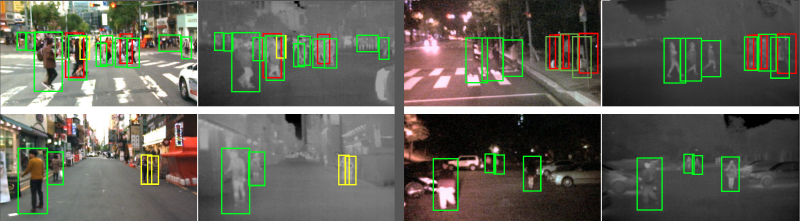

We developed imaging hardware consisting of a color camera, a thermal camera and a beam splitter to capture aligned multispectral (RGB color + thermal) images. With this hardware, we captured various regular traffic scenes during the day and at night to account for changes in lighting conditions.

The KAIST Multispectral Pedestrian Dataset consists of 95k color-thermal pairs (640x480, 20Hz) taken from a vehicle. All the pairs are manually annotated (person, people, cyclist) for a total of 103,128 dense annotations and 1,182 unique pedestrians. The annotations include temporal correspondences between bounding boxes, as in the Caltech Pedestrian Dataset. More information can be found in our CVPR 2015 [paper] [Ext. Abstract].
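To illustrate what a per-frame label might look like when read outside Matlab, here is a minimal Python sketch. It assumes the labels are stored as per-frame text files in the bbGt text format of Piotr's toolbox: a `% bbGt version=3` header line, then one line per box starting with `label x y w h occlusion`, followed by further fields that this sketch ignores. That layout, the `Box` class and the `parse_bbgt` helper are assumptions for illustration, not the official demo code.

```python
from dataclasses import dataclass


@dataclass
class Box:
    label: str      # e.g. "person", "people", "cyclist"
    x: float        # left edge in pixels
    y: float        # top edge in pixels
    w: float        # width in pixels
    h: float        # height in pixels
    occlusion: int  # occlusion flag/level as stored in the file (assumed 0 = none)


def parse_bbgt(text):
    """Parse bbGt-style annotation text (assumed format, see lead-in) into Box objects."""
    boxes = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the '% bbGt version=3' header.
        if not line or line.startswith("%"):
            continue
        fields = line.split()
        label = fields[0]
        x, y, w, h = (float(v) for v in fields[1:5])
        # Remaining fields (visible box, ignore flag, angle) are not used here.
        occlusion = int(fields[5]) if len(fields) > 5 else 0
        boxes.append(Box(label, x, y, w, h, occlusion))
    return boxes
```

For example, `parse_bbgt(open(path).read())` returns one `Box` per annotated object in that frame, which is enough to draw rectangles on both the color and the thermal image, since the pair is aligned.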

Download

You can install gdown via pip:

pip install gdown

Preview set (1.44GB)

The preview set includes demo code that parses the labels and draws bounding boxes on each color-thermal image pair.

gdown 11nhHpmuh2FUjrLNfGs51R2Mqqy1GTjY8

Full set (36.32GB)

gdown 1sBcAmFqNJmNMBZdMtKmO2X4BRjKPyKMc

Integrity Verification

To ensure your download is complete and hasn’t been corrupted, we provide an MD5 checksum file.

1. Download MD5 file

You can download the checksum file along with the dataset:

- Preview set (kaist-cvpr15-preview.tar)

gdown 1nJkdnSI9fAuhhZPKFfhVXimXGzvuRTb6

- Full set (kaist-cvpr15.tar)

gdown 1KQ9lZXX9nfZuhbqBUEdlU2Yjx3nzeg5c

2. Verify Automatically (Recommended)

If you have both kaist-cvpr15.tar and kaist-cvpr15.tar.md5 in the same directory, run the following command:

- Linux / WSL:

md5sum -c kaist-cvpr15.tar.md5

- macOS:

# Check manually or use md5sum (if installed via brew)
FILE="kaist-cvpr15.tar"; md5 -q $FILE | grep -qf - $FILE.md5 && echo "$FILE: OK"
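If neither `md5sum` nor `md5` is convenient (for example on Windows without WSL), the same check can be done with Python's standard `hashlib` module. This is a minimal sketch, assuming the `.md5` file uses the `md5sum` layout (`<hash>  <filename>`), which is what `md5sum -c` expects; the helper names `md5_of` and `verify` are hypothetical.

```python
import hashlib
from pathlib import Path


def md5_of(path, chunk_size=1 << 20):
    """Return the MD5 hex digest of a file, read in 1 MiB chunks to keep memory use low."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(tar_path):
    """Compare a file against '<file>.md5' in the same directory.

    Assumes md5sum format: the first whitespace-separated token is the hash.
    """
    tar_path = Path(tar_path)
    md5_path = tar_path.with_name(tar_path.name + ".md5")
    expected = md5_path.read_text().split()[0].lower()
    return md5_of(tar_path) == expected
```

Usage: `print("OK" if verify("kaist-cvpr15.tar") else "FAILED")`. Reading in chunks matters here because the full archive is about 36GB and should not be loaded into memory at once.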

Preview videos (low-quality)

Toolbox

This repository includes an extension of Piotr’s Computer Vision Matlab Toolbox.

We modified parts of the code to handle 4-channel RGB+T images, e.g. ${PIOTR_TOOLBOX}/channels/chnsCompute.m.

All the modifications are in libs/.
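The toolbox itself is Matlab. As a language-neutral illustration of what a 4-channel RGB+T input is, the NumPy sketch below appends the thermal image as a fourth channel after R, G and B. Stacking pixel by pixel is only meaningful because the beam-splitter hardware produces aligned pairs. The `stack_rgbt` name is hypothetical, and the handling of a thermal frame that loads with three identical gray channels is an assumption, not something the toolbox specifies.

```python
import numpy as np


def stack_rgbt(color, thermal):
    """Stack an HxWx3 color image and its aligned thermal image into an HxWx4 RGB+T array."""
    color = np.asarray(color)
    thermal = np.asarray(thermal)
    if thermal.ndim == 3:
        # Assumption: the thermal frame may load as 3 identical gray channels; keep the first.
        thermal = thermal[..., 0]
    if color.ndim != 3 or color.shape[2] != 3:
        raise ValueError("color image must be HxWx3")
    if color.shape[:2] != thermal.shape:
        raise ValueError("color and thermal images must have the same height and width")
    # Append thermal as the 4th channel, giving channel order (R, G, B, T).
    return np.concatenate([color, thermal[..., np.newaxis]], axis=-1)
```

For a 640x480 pair this yields an array of shape `(480, 640, 4)`, which is the kind of input the modified channel computation has to accept.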

Experimental results

Many researchers have worked to improve pedestrian detection performance on our benchmark. If you are interested, please see the following works (log-average miss rate; lower is better):

- FusionRPN + BDT [CVPR ’17]: 29.83%

- Halfway Fusion [BMVC ’16]: 36.22%

- LateFusion CNN [ESANN ’16]: 43.80%

- CMT-CNN [CVPR ’17]: 49.55%

- Baseline, ACF+T+THOG [CVPR ’15]: 54.40%
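The numbers above are log-average miss rates in the style of the Caltech pedestrian evaluation: the miss rate is sampled at nine FPPI (false positives per image) values evenly spaced in log space over [10^-2, 10^0] and averaged geometrically. The Python sketch below shows that computation under stated assumptions: the curve is given as parallel lists sorted by increasing FPPI, each reference point takes the miss rate at the largest FPPI not exceeding it, and a miss rate of 1.0 is assumed where the curve never reaches that low an FPPI. The function name is hypothetical; for comparable numbers, use the official evaluation code and the leaderboard.

```python
import math


def log_average_miss_rate(fppi, miss_rate):
    """Sketch of a Caltech-style log-average miss rate.

    fppi and miss_rate are parallel lists describing one detector's curve,
    sorted by increasing FPPI.
    """
    # Nine reference FPPI values: 10^-2, 10^-1.75, ..., 10^0.
    refs = [10 ** (-2 + 0.25 * i) for i in range(9)]
    sampled = []
    for ref in refs:
        # Miss rate at the largest FPPI that does not exceed the reference point.
        candidates = [m for f, m in zip(fppi, miss_rate) if f <= ref]
        # Assumption: treat unreachable reference points as a miss rate of 1.0.
        sampled.append(candidates[-1] if candidates else 1.0)
    # Geometric mean; the floor avoids log(0) for a perfect detector.
    return math.exp(sum(math.log(max(m, 1e-10)) for m in sampled) / len(sampled))
```

A detector whose miss rate stays at 0.5 across the whole FPPI range scores 0.5, i.e. 50%. Averaging in log space keeps the low-FPPI end of the curve from being dominated by the high-FPPI end.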

Other research that exploits multiple modalities has also been presented:

- Image-to-image translation [arXiv ’17]

- Calibrations

Related benchmarks

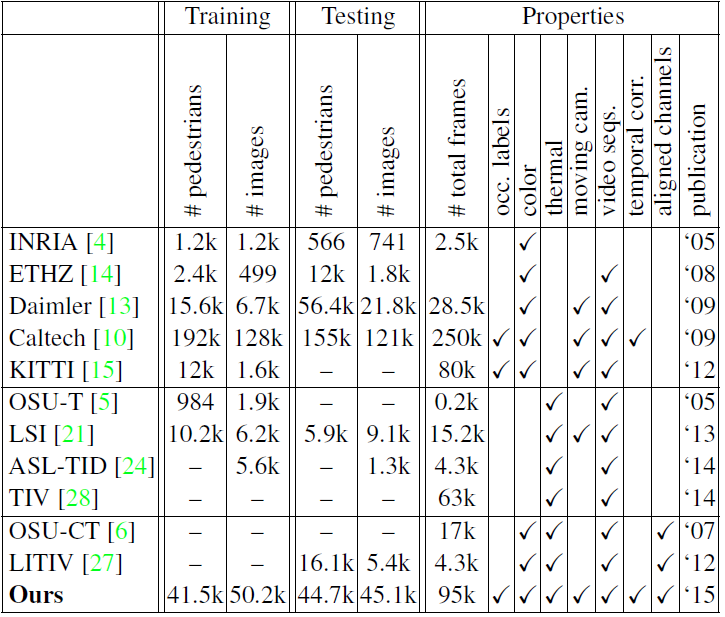

The horizontal lines in the figure group the datasets by image type (color, thermal and color-thermal). Note that ours is the largest color-thermal dataset that provides occlusion labels and temporal correspondences captured in non-static traffic scenes.

Please see our Place Recognition Benchmark. [Link]

License

Please see LICENSE.md for more details.

Citation

If you use our extended toolbox or dataset in your research, please consider citing:

@inproceedings{hwang2015multispectral,
  Author = {Soonmin Hwang and Jaesik Park and Namil Kim and Yukyung Choi and In So Kweon},
  Title = {Multispectral Pedestrian Detection: Benchmark Dataset and Baselines},
  Booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  Year = {2015}
}